Infrastructure & System Support (ISS) Department

Scientific Computing Systems enables data-intensive workflows and computing pipelines for SLAC’s research facilities and experiments. The department is responsible for developing and supporting a portfolio of data-centric HPC platforms and services using common shared infrastructure. A key aspect of the Scientific Computing Systems strategy is providing baseline capabilities for the entire user community. The Scientific Computing baseline is a powerful incentive for driving lab-wide collaboration and seeding new lab initiatives. The department seeks continual engagement with users, PIs, senior leadership and business managers. Scientific Computing Systems is shaped and driven by lab requirements. We are considered a research partner and we are always willing to engage and consult.

The SCS department manages the three main research computing infrastructures at the lab: the SLAC Shared Science Data Facility (S3DF), the US Data Facility for the Rubin Observatory (USDF), and the Controls & Data IT System for LCLS (CDS). Over the next couple of years, SCS’s main goal will be to integrate these systems so that all three infrastructures use a shared set of redundant core services.

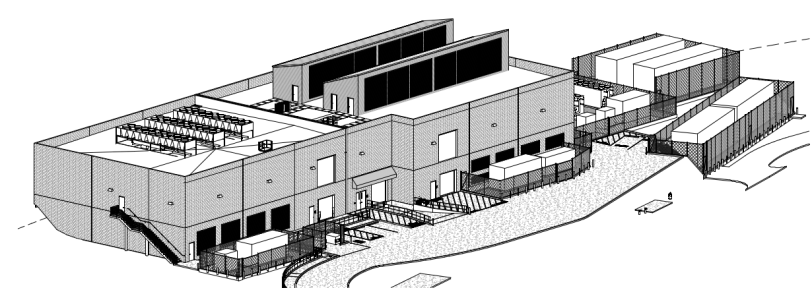

The S3DF is a compute, storage and network architecture designed to support the massive-scale analytics required for LCLS-II, CryoEM and Machine Learning. S3DF effectively replaces several outdated SLAC systems. It is an all-new environment that serves as a greenfield for modern technologies: storage, containerization, interactive workflows, data management, identity and access. The S3DF infrastructure is optimized for data analytics and is characterized by large, high-throughput, high-concurrency storage systems. Over the next decade, S3DF will deploy a few PFLOPS of CPU computing, tens of PFLOPS of GPU computing, hundreds of petabytes of fast storage, and more than two exabytes of archive capacity.

Key Competencies

Networking & Core services

The combined S3DF/USDF infrastructure will need to process several terabytes per second of traffic. SCS is designing an advanced Ethernet/InfiniBand-based network to handle the internal traffic, the connections to the various experimental facilities within the lab, the connection to SLAC core, and, eventually, the connection to ESnet for moving data to and from the DOE High End Computing facilities (NERSC, OLCF, ALCF) and the experimenters’ home institutions.

Storage

The combined S3DF/USDF storage is projected to host multiple exabytes of science data. For SLAC’s next-generation storage system, SCS is architecting a heterogeneous system consisting of a tape archive plus spindle-based and flash-based systems with POSIX and object store interfaces. An advanced data management system will move data across these layers based on the experimenters’ usage patterns.
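
As an illustration only, the idea of moving data between layers according to usage can be sketched as a simple age-based policy. This is a minimal sketch and not a description of the SCS data management system: the tier names, the idle-time thresholds, and the `Dataset`, `choose_tier`, and `plan_moves` names are all hypothetical, chosen to show the general shape of such a policy.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical thresholds, for illustration only (not SCS policy).
FLASH_MAX_IDLE_DAYS = 30    # recently used data stays on flash
DISK_MAX_IDLE_DAYS = 365    # less active data lives on spinning disk


@dataclass
class Dataset:
    name: str
    days_since_last_access: int


def choose_tier(ds: Dataset) -> str:
    """Pick a target tier from how long the dataset has been idle."""
    if ds.days_since_last_access <= FLASH_MAX_IDLE_DAYS:
        return "flash"
    if ds.days_since_last_access <= DISK_MAX_IDLE_DAYS:
        return "disk"
    return "tape"


def plan_moves(
    current: Dict[str, str], datasets: List[Dataset]
) -> List[Tuple[str, str, str]]:
    """Return (dataset, source tier, target tier) for data that should move."""
    moves = []
    for ds in datasets:
        source = current.get(ds.name)
        target = choose_tier(ds)
        # Skip datasets with unknown placement or already on the right tier.
        if source is not None and source != target:
            moves.append((ds.name, source, target))
    return moves
```

A real system would likely weigh more signals than idle time (access frequency, dataset size, experiment schedules), but the same plan-then-move structure applies.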

HPC Platforms

SCS is building the platforms that support the core services required to operate the S3DF/USDF. These include network services, authentication and authorization, logging and monitoring, and storage for users’ home directories and software repositories. All services will be deployed in a high-availability environment using modern technologies, such as containers and virtual machines, and advanced networking techniques, such as anycast.

Science Applications

Several experimental teams will run their analysis pipelines in the S3DF infrastructure. The role of the science applications team within SCS is to engage with the different experimental teams to optimize their workflows within the existing infrastructure and, through an iterative process, to deploy any new technology that a specific application may require. More generally, the science applications team will design, deploy, support, and advise on batch job submission techniques, interactive analysis tools, web hosting, workflow tools, and database technologies.